Just-in-Time (JIT) Coding: When Software Stops Being Written and Starts Being Generated on the Fly

Most code today is static. We write it, package it, deploy it, run it, and then keep running the same old artifact until we eventually muster up the energy to update it.

But this era is ending.

We are entering a world where most software will be generated on demand, and where specifications become the true source code. The implementations we grew up writing aren’t the primary product anymore; they’re byproducts.

The spec is the program; the code is just exhaust.

The Long Arc: 1.0 → 2.0 → 3.0

For forty years, software meant one thing: deterministic instructions executed exactly as written.

Software 1.0

Static code + compilers + manual logic.

Software 2.0

Neural networks where we program by example, not instruction.

Software 3.0 (Phase 1): Vibe Coding

The LLM era we’re living through now, as of November 2025: “vibe coding”.

We use AI assistants to generate code, write specs, build glue, and accelerate development. But we still deploy code as static artifacts.

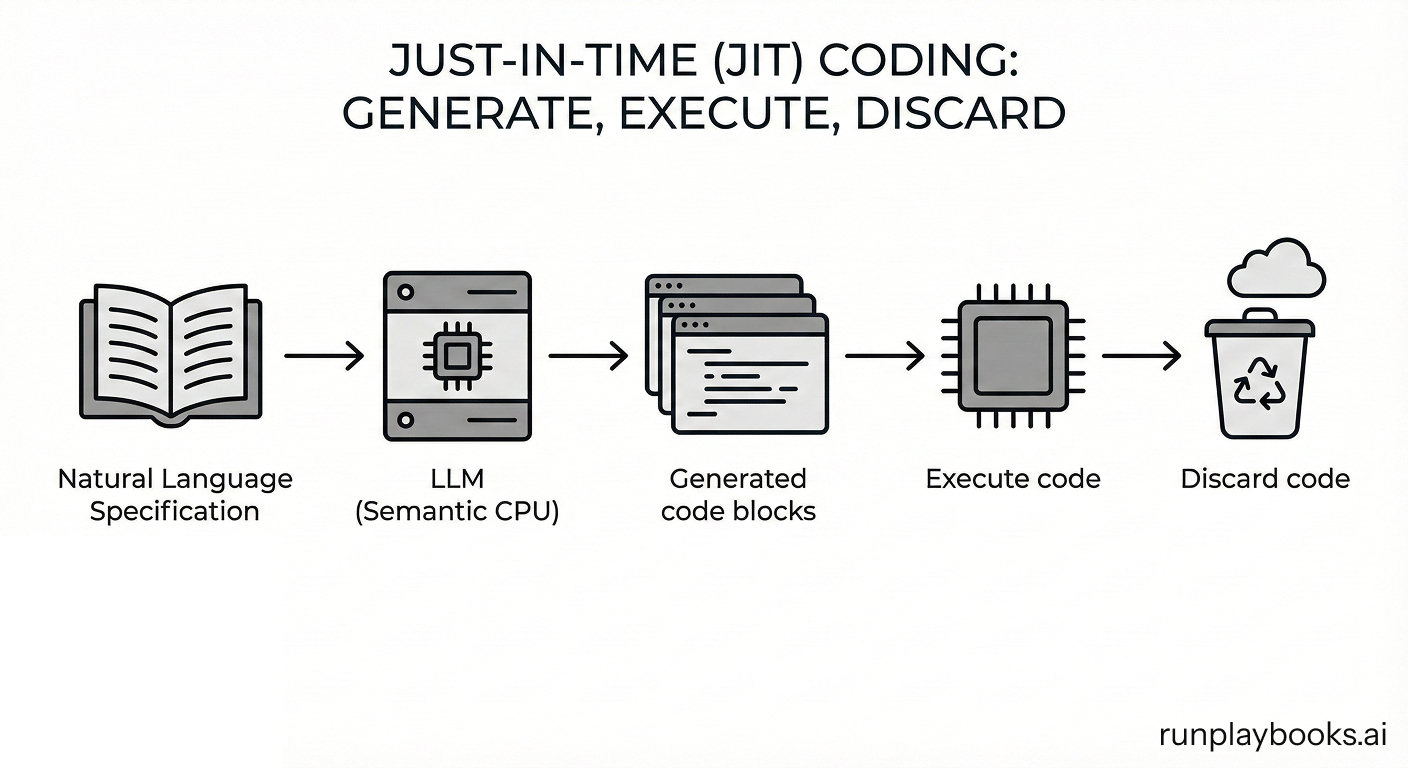

Software 3.0 (Phase 2): JIT Coding

Here, the switch flips:

- We don’t ship final code.

- We ship specifications.

- The system interprets and generates code at the moment of execution.

- Every situation gets its own optimized, contextual, highly specific implementation.

- And behavior evolves automatically as the models evolve.

This is the natural end state of Software 3.0.

Why JIT Coding? Because Static Software Is a Poor Model of Intelligence

Static code is rigid by design. It encodes one-size-fits-all behavior, which proves to be brittle when it meets the complexities of the real world.

- Customer issues differ in subtle ways.

- Business logic changes based on conditions and context.

- Workflows adapt with time, environment, and nuance.

- Human operators improvise when rules fail.

Traditional software cannot improvise; it can only execute a predefined path. Agentic loops are fluid, but there is no guarantee that they will follow the business rules we specified in prompts.

To bring true reasoning, planning, adaptability, and situational awareness into software execution, we need a new model, one where:

- the system understands the goal,

- interprets context,

- generates the exact code needed,

- executes it,

- and discards it.
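The five-step loop above is easy to state in code. Here is a minimal Python sketch, assuming a pluggable generator in place of a real LLM call; `jit_execute` and `template_generator` are hypothetical names, and the generator is a deterministic toy so the example runs offline. What matters is the shape: code is produced per context, run once, and never stored.

```python
from typing import Callable, Dict

# A generator maps (goal, context) to Python source. In a JIT system this would
# be an LLM call; here it is any callable, so the sketch runs offline.
Generator = Callable[[str, Dict], str]


def jit_execute(goal: str, context: Dict, generate: Generator):
    """Generate code for one goal and context, run it once, keep only the result."""
    source = generate(goal, context)          # generate the exact code needed
    code = compile(source, "<jit>", "exec")   # reject syntax errors before running
    namespace = {"context": context}          # fresh namespace per execution
    exec(code, namespace)                     # execute
    # Discard: source, code, and namespace are never cached, so they are
    # dropped when this function returns; only the result survives.
    return namespace.get("result")


def template_generator(goal: str, context: Dict) -> str:
    """Hypothetical stand-in for an LLM that specializes code to the context.

    The goal is ignored by this toy; a real generator would condition on it.
    """
    if context.get("tier") == "premium":
        return "result = f'Refund approved for {context[\"name\"]}'"
    return "result = f'Refund for {context[\"name\"]} queued for review'"


print(jit_execute("handle refund", {"name": "Ada", "tier": "premium"}, template_generator))
# Refund approved for Ada
print(jit_execute("handle refund", {"name": "Bo", "tier": "basic"}, template_generator))
# Refund for Bo queued for review
```

A real system would put validation and sandboxing between generation and `exec`; bare `exec` on model output appears here only to keep the sketch short.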

You don’t need a universal solution. You need a solution generated specifically for the moment.

JIT coding delivers exactly that: human-level fluidity in machine-level execution.

The Human Analogy: How We Follow Instructions

Humans don’t carry around prewritten instruction sets.

When faced with a new situation, such as a tricky conversation, a broken tool, or an unexpected detour, the brain does not retrieve a precompiled block of logic. It implicitly generates action plans on the fly, shaped by context, intent, and high-level goals, and executes them through neural dynamics.

In the brain, the “code” is never explicitly instantiated; it simply unfolds over time.

This is why human behavior is flexible and why we handle novelty so effortlessly. And this is what JIT coding enables: software that generates highly contextual code precisely when it is needed.

The similarity is not just a metaphor; it is the essence of intelligence.

Why Today’s Agent Frameworks Fall Short

Today’s agent frameworks and LLM-enabled workflows (LangGraph, AutoGen, CrewAI, AgentOS, etc.) all share a foundational limitation:

They are Software 1.0 execution engines wearing Software 3.0 hats.

Their pattern is:

- write static code,

- call LLMs as helpers,

- glue everything together through rigid control flows,

- hope nothing breaks.

This produces systems that are:

- brittle,

- hard to debug,

- difficult to evolve,

- dependent on complex plumbing, and

- prone to treating LLM reasoning and planning as an afterthought.

They barely tap what LLMs can do today, and they cannot take advantage of new model capabilities without rewriting everything.

They were built with fixed assumptions about what LLMs can and cannot do. So when a future model such as GPT-6 or Claude 5 arrives and can natively execute more sophisticated instructions, static systems like these can’t leverage those advances without refactoring the entire codebase.

JIT systems sidestep this completely. They evolve automatically, because their behavior flows directly from model capabilities. Forward compatibility is built in!

The Determinism Question

A natural question emerges:

If systems generate code on demand, how can they be reliable?

The key is recognizing what determinism actually means in software. We already accept nondeterminism in many places: thread scheduling, distributed systems, network timing, GPU kernels, speculative execution. We don’t insist on controlling every machine instruction; we insist that the system’s behavior matches its specification. As long as the outcome adheres to the intended semantics, we’re satisfied. JIT coding follows the same principle: preserve determinism where it matters (in the semantics) and allow flexibility where it doesn’t (in the ephemeral implementation).
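One way to make "behavior matches the specification" concrete is to write the spec as an executable check on outcomes rather than on code. The sketch below assumes such a contract for a toy "sort and deduplicate" task; `impl_run_a` and `impl_run_b` stand in for two different implementations a generator might emit on different runs, and all names are hypothetical. Both satisfy the spec even though their code shares nothing, while an implementation that violates the semantics is caught.

```python
import random
from typing import Callable, List

Impl = Callable[[List[int]], List[int]]


def spec_sort_unique(inp: List[int], out: List[int]) -> bool:
    """The spec: output is strictly ascending and holds exactly the input's values."""
    strictly_ascending = all(a < b for a, b in zip(out, out[1:]))
    return strictly_ascending and set(out) == set(inp)


def impl_run_a(xs: List[int]) -> List[int]:
    # One plausible generated implementation: sort a set.
    return sorted(set(xs))


def impl_run_b(xs: List[int]) -> List[int]:
    # Another: ordered insertion, skipping values already present.
    out: List[int] = []
    for x in xs:
        if x not in out:
            i = 0
            while i < len(out) and out[i] < x:
                i += 1
            out.insert(i, x)
    return out


def conforms(impl: Impl, trials: int = 200, seed: int = 0) -> bool:
    """Check an implementation against the spec on seeded random inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.randint(-20, 20) for _ in range(rng.randint(0, 15))]
        if not spec_sort_unique(xs, impl(xs)):
            return False
    return True


print(conforms(impl_run_a), conforms(impl_run_b))  # True True
print(conforms(sorted))                            # False: sorted() keeps duplicates
```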

1. Determinism lives in the specification

What we truly care about is that the specification is stable, testable, version-controlled, and unambiguous. This becomes the behavioral anchor for the system.

2. Semantic compilation ensures consistent interpretation

A lower-level semantic representation (akin to an assembly language for meaning) guarantees consistent interpretation. Nearly all variability is eliminated at compile time, leaving just enough semantic fluidity for the system to adapt at runtime.

3. Most generated code is “trivial”

Because semantic compilers emit fine-grained instructions and strict constraints, the generated Python becomes straightforward, schema-constrained, and statically checkable immediately before execution. This isn’t creative coding; it’s controlled translation.
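Here is what "statically checkable immediately before execution" might mean in practice: parse the generated source and reject anything outside a narrow allow-list before it runs. This is a minimal sketch, assuming the only permitted calls are the `Step`, `Say`, and `Yld` names that appear in the generated example later in this piece; `check_generated` and `ALLOWED_CALLS` are hypothetical, and `ast.unparse` needs Python 3.9 or later. An allow-list like this is one layer of defense, not a security sandbox.

```python
import ast

# Hypothetical allow-list: the only functions generated code may call.
ALLOWED_CALLS = frozenset({"Step", "Say", "Yld"})


def check_generated(source: str, allowed=ALLOWED_CALLS) -> list:
    """Return a list of violations; an empty list means the code passes."""
    try:
        # PyCF_ALLOW_TOP_LEVEL_AWAIT lets snippets use bare `await`, as generated code does.
        tree = compile(source, "<jit>", "exec",
                       flags=ast.PyCF_ONLY_AST | ast.PyCF_ALLOW_TOP_LEVEL_AWAIT)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg} (line {exc.lineno})"]
    problems = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            problems.append(f"line {node.lineno}: imports are not allowed")
        elif isinstance(node, ast.Call):
            # Only plain-name calls such as Step(...) can be on the allow-list;
            # attribute calls like os.system(...) never are.
            name = node.func.id if isinstance(node.func, ast.Name) else None
            if name not in allowed:
                problems.append(f"line {node.lineno}: call to {ast.unparse(node.func)} not allowed")
    return problems


print(check_generated('await Step("HelloWorldDemo:01:QUE")\nawait Say("user", "Hello!")'))  # []
print(check_generated('import os\nos.system("ls")'))
# ['line 1: imports are not allowed', 'line 2: call to os.system not allowed']
```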

4. Current agent systems are already nondeterministic, just unmanaged

Every LLM call introduces variability, and today’s frameworks have no principled way to shape it, constrain it, or benefit from it. They inherit nondeterminism without control.

JIT coding doesn’t add nondeterminism. It channels it, constrains it, and makes it safe, all while enabling intelligent behavior.

If you demand determinism in the implementation, you block intelligence. Instead, demand determinism in the semantic intent and you can get both reliability and adaptability.

Hyper-Specialized Software

Traditional software gives every user the same implementation.

JIT systems do the opposite and produce software on the fly:

- per user

- per request

- per situation

Every execution becomes a unique use case, optimized for the exact context.

The result:

- tailored workflows

- logic that adapts to specifics

- edge cases handled naturally

- minimal branching logic

- no unreliable agent loops

Software stops being a brittle static artifact. It becomes a fluid, expressive, intelligent system.

A Bold Prediction

As JIT systems scale, they will generate software at a rate that dwarfs even vibe-coded development:

Future systems will generate more JIT code in an hour than engineers write in a decade.

The specification becomes the real artifact. The code becomes ephemeral.

Start Using JIT Coding Today

Playbooks is an agent framework built on JIT coding from first principles.

Here’s the flow:

First, developers write natural-language specifications as Playbooks programs, mixing in regular Python where appropriate.

Then, these specifications are semantically compiled into a low-level, natural-language intermediate form (Playbooks Assembly Language).

During execution, the Playbooks runtime:

- takes a portion of the compiled spec,

- asks an LLM to generate Python for that portion in context,

- validates and executes that Python on the same call stack as regular code,

- and discards it afterward.
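The four runtime steps above can be approximated in plain Python. This is a minimal illustration under stated assumptions, not the Playbooks implementation: `fake_llm` replaces the model call, `run_portion` is a hypothetical name, and the `Step`/`Say` coroutines here only record a trace. Compiling with `ast.PyCF_ALLOW_TOP_LEVEL_AWAIT` (Python 3.8+) lets a generated snippet use bare `await`. Awaiting the coroutine that `eval` returns then runs the snippet inside the caller's own task, which is one way to get "the same call stack as regular code".

```python
import ast
import asyncio
import inspect

trace = []  # records what the stand-in runtime primitives were asked to do


# Hypothetical stand-ins for runtime primitives; they only record calls.
async def Step(label: str) -> None:
    trace.append(("step", label))


async def Say(target: str, message: str) -> None:
    trace.append(("say", target, message))


def fake_llm(instruction: str) -> str:
    """Stand-in for the LLM: returns Python for one compiled instruction."""
    return f'await Step("Demo:{instruction}")\nawait Say("user", "Hello!")\n'


async def run_portion(instruction: str) -> None:
    source = fake_llm(instruction)                   # 1. generate code for this portion
    code = compile(source, "<jit>", "exec",          # 2. validate: it must at least compile
                   flags=ast.PyCF_ALLOW_TOP_LEVEL_AWAIT)
    namespace = {"Step": Step, "Say": Say}           # names the snippet can call (eval adds builtins)
    result = eval(code, namespace)                   # 3. execute; top-level await yields a coroutine
    if inspect.iscoroutine(result):
        await result                                 #    awaited inside the caller's own task
    # 4. discard: nothing is cached, so source, code, and namespace are dropped on return


async def main() -> None:
    for instruction in ["01:QUE", "02:QUE"]:
        await run_portion(instruction)
    print(trace)


if __name__ == "__main__":
    asyncio.run(main())
```

In a fuller version, step 2 would also apply static checks (allowed calls, no imports) before anything runs.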

Specification → semantic assembly → ephemeral code → execution

Example: Playbooks Program

Here is an excerpt from a Hello World Playbooks program.

- Greet the user with a hello playbooks message

- Tell the user that this is a demo for the playbooks system

- Say goodbye to the user

- End program

Compiled Assembly

This is an excerpt from a compiled Hello World program.

- 01:QUE Say(user, Greet the user with a hello playbooks message)

- 02:QUE Say(user, Tell the user that this is a demo for the playbooks system)

- 03:QUE Say(user, Say goodbye to the user)

- 04:YLD for exit
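Each assembly line follows a visible pattern: a two-digit step number, a three-letter opcode, and a natural-language operand. A minimal parser for that shape might look like the following. The grammar is inferred only from this excerpt (the real Playbooks Assembly Language may be richer), and `Instruction`, `parse_assembly`, and `LINE_RE` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import List

# Grammar inferred from the excerpt only: "NN:OPC operand", e.g. "01:QUE Say(user, ...)".
LINE_RE = re.compile(r"^(?P<num>\d{2}):(?P<op>[A-Z]{3})(?:\s+(?P<arg>.*))?$")


@dataclass(frozen=True)
class Instruction:
    number: int
    opcode: str    # e.g. "QUE" or "YLD", as they appear in the excerpt
    operand: str   # the rest of the line, kept as natural language

    def label(self, playbook: str) -> str:
        # Same shape as the Step labels in the generated Python, e.g. "HelloWorldDemo:01:QUE".
        return f"{playbook}:{self.number:02d}:{self.opcode}"


def parse_assembly(text: str) -> List[Instruction]:
    """Parse one instruction per line, ignoring blank lines and leading "- " bullets."""
    program = []
    for raw in text.splitlines():
        line = raw.strip().lstrip("- ")
        if not line:
            continue
        m = LINE_RE.match(line)
        if m is None:
            raise ValueError(f"unrecognized instruction: {line!r}")
        program.append(Instruction(int(m["num"]), m["op"], m["arg"] or ""))
    return program


for ins in parse_assembly("- 03:QUE Say(user, Say goodbye to the user)\n- 04:YLD for exit"):
    print(ins.label("HelloWorldDemo"), "->", ins.operand)
# HelloWorldDemo:03:QUE -> Say(user, Say goodbye to the user)
# HelloWorldDemo:04:YLD -> for exit
```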

Example JIT-generated Python

# execution_id: 1

await Step("HelloWorldDemo:01:QUE")

await Say("user", "Hello! Welcome to the Playbooks system demo!")

await Step("HelloWorldDemo:02:QUE")

await Say("user", "This is a demonstration of the playbooks system in action.")

await Step("HelloWorldDemo:03:QUE")

await Say("user", "Thank you for trying this demo. Goodbye!")

await Step("HelloWorldDemo:04:YLD")

await Yld("exit")
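Because generated code this regular is easy to inspect, it can be checked mechanically against the compiled assembly. The sketch below assumes stand-in `Step`, `Say`, and `Yld` coroutines that merely record what happens (the real runtime's versions do actual work). It executes the generated snippet above and confirms that every compiled step label is visited in order. `record_run`, `visits_steps_in_order`, and the label list are hypothetical helpers for illustration.

```python
import ast
import asyncio

# The generated snippet from above, verbatim (minus the execution_id comment).
GENERATED = '''
await Step("HelloWorldDemo:01:QUE")
await Say("user", "Hello! Welcome to the Playbooks system demo!")
await Step("HelloWorldDemo:02:QUE")
await Say("user", "This is a demonstration of the playbooks system in action.")
await Step("HelloWorldDemo:03:QUE")
await Say("user", "Thank you for trying this demo. Goodbye!")
await Step("HelloWorldDemo:04:YLD")
await Yld("exit")
'''

# Step labels implied by the compiled assembly excerpt, in program order.
COMPILED_LABELS = ["HelloWorldDemo:01:QUE", "HelloWorldDemo:02:QUE",
                   "HelloWorldDemo:03:QUE", "HelloWorldDemo:04:YLD"]


async def record_run(source: str) -> list:
    """Execute generated code against recording stubs and return the event log."""
    events = []

    async def Step(label):          # stub: note which compiled step is active
        events.append(("Step", label))

    async def Say(target, text):    # stub: capture the message instead of sending it
        events.append(("Say", target, text))

    async def Yld(reason):          # stub: record the yield reason
        events.append(("Yld", reason))

    code = compile(source, "<jit>", "exec", flags=ast.PyCF_ALLOW_TOP_LEVEL_AWAIT)
    # The snippet uses top-level await, so eval returns a coroutine to await.
    await eval(code, {"Step": Step, "Say": Say, "Yld": Yld})
    return events


def visits_steps_in_order(events: list, labels: list) -> bool:
    """True if the Step calls hit exactly the compiled labels, in order."""
    return [e[1] for e in events if e[0] == "Step"] == labels


events = asyncio.run(record_run(GENERATED))
print(visits_steps_in_order(events, COMPILED_LABELS))  # True
print(events[-1])                                      # ('Yld', 'exit')
```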

This is what true Software 3.0 looks like. Playbooks treats LLMs as semantic CPUs, executing natural-language programs directly.

The Future of Software

Static code will continue to exist, but increasingly only as the substrate on which intelligent systems run.

Above it emerges a new layer where:

- intent is the true program,

- LLMs act as semantic processors,

- implementations are generated on demand,

- and software behaves more like an employee than a machine.

This is how intelligent software will ultimately work.

Software stops being written. It starts being generated: continuously, fluidly, intelligently.

Once you see this shift clearly, there is no going back.